Combining human judgment and data-driven approaches for the development of interpretable models of student behaviors: Applications to computer science education (2020–2024)

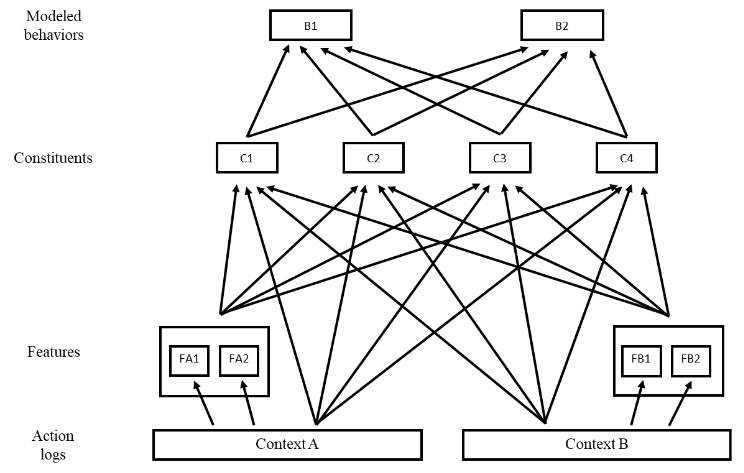

This NSF-funded project formalizes a hybridized approach to student modeling that produces models that are both interpretable by non-experts and robust to the noisiness of human behavior data. It combines knowledge elicitation approaches, which use human experts to develop interpretable models and to filter out noisy information, with data-driven approaches using machine learning algorithms, which can often discover relationships that are difficult for human experts to articulate. We apply this hybridized approach in the context of computer science education, leveraging the increased interpretability of the resulting models to (1) better understand student debugging behaviors during programming activities, (2) support students in reflecting on the strategies they use and developing more efficient debugging strategies, (3) provide instructors with actionable information about their students’ debugging activities, and (4) support future computer science teachers in developing expertise in formulating hypotheses about a student’s debugging strategies.

Exploring algorithmic transparency: Interpretability and explainability of educational models

The HEDS Lab is investigating the interpretability challenges posed by many state-of-the-art predictive models in education. We are pursuing three general threads of research: (1) understanding the differences and tradeoffs between post-hoc explainability and intrinsically interpretable methods as routes to transparency, (2) creating intrinsically interpretable machine learning models that retain the advantages of more complex algorithms, and (3) devising metrics and designs to effectively evaluate the interpretability of prediction models. We are interested in collaborating in this space, so feel free to reach out!

Relevant links:

- Human-Centric eXplainable AI in Education Workshop (HEXED), co-organized by members of our lab

- Liu, Q., Pinto, J. D., & Paquette, L. (2024). Applications of explainable AI (XAI) in education

- Pinto, J. D., & Paquette, L. (2024). Towards a unified framework for evaluating explanations

Constraints-based approach to interpretable-by-design modeling

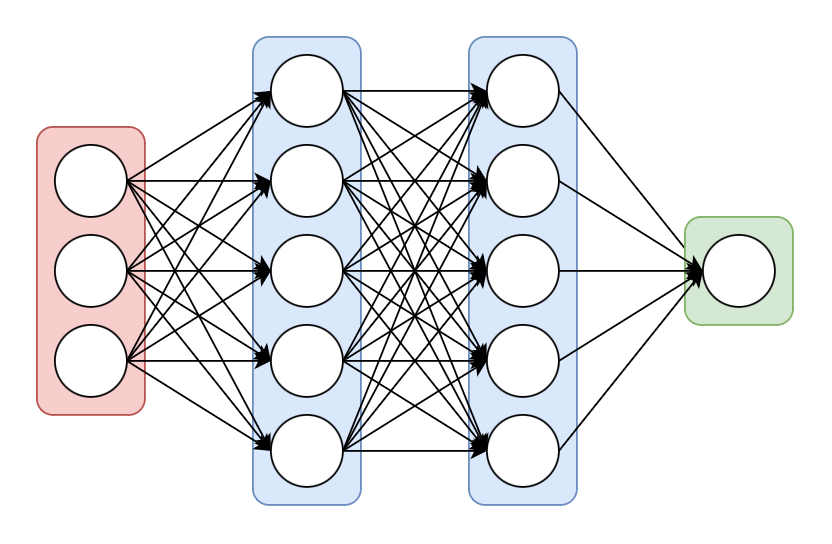

As part of our efforts to explore algorithmic transparency, one approach we are taking focuses on creating a fully interpretable model: the parameters we extract for our explanations have a clear interpretation, fully capture the model’s learned knowledge about the learner behavior of interest, and can be used to create explanations that are both faithful and intelligible. This project has three main goals: (1) develop an interpretable neural network, comparing its accuracy and examining issues relevant to interpretability approaches as a whole, (2) evaluate the model’s level of interpretability using a human-grounded evaluation approach, and (3) validate the model’s inner representations and explore some hypothesized advantages of interpretable models, including their use for knowledge discovery. We have achieved the first two goals (see the links below for details) and are currently working on the third. We will continue linking relevant publications here as they become available.

Relevant links:

- Pinto, J. D., & Paquette, L. (2025). A constraints-based approach to fully interpretable neural networks for detecting learner behaviors

- Pinto, J. D., Paquette, L., & Bosch, N. (2024). Intrinsically interpretable artificial neural networks for learner modeling

- Pinto, J. D., Paquette, L., & Bosch, N. (2023). Interpretable neural networks vs. expert-defined models for learner behavior detection

INVITE AI Institute (2023–2028)

The HEDS Lab is part of the INVITE Institute (INclusiVe Intelligent Technologies for Education), one of the NSF National AI Research Institutes. INVITE seeks to fundamentally reframe how AI-based educational technologies interact with learners by developing AI techniques to track and promote skills that underlie successful learning and contribute to academic success: persistence, academic resilience, and collaboration. The HEDS Lab focuses primarily on the institute’s learner modeling strand.

Using Data Mining and Observation to Derive an Enhanced Theory of SRL in Science Learning Environments (2016–2021)

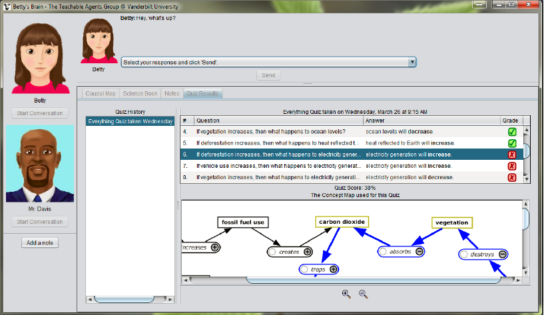

The primary goal of this project was to enhance the theory and measurement of students’ self-regulated learning (SRL) processes during science learning by developing a technology-based framework that leverages human expert judgment and machine learning methods to identify key moments during SRL and analyze these moments in depth. These issues were studied in the context of Betty’s Brain, an open-ended science learning environment that combines learning-by-modeling with critical thinking and problem-solving skills to teach complex science topics. The project used data-driven detectors of students’ SRL behaviors and emotional states to conduct in-the-moment qualitative interviews at key moments in students’ interactions with Betty’s Brain.

Improving Collaborative Learning in Engineering Classes Through Integrated Tools (2016–2021)

The goal of this project was to design and study tools that (1) support teaching assistants in learning about and implementing collaborative learning and (2) support students engaged in collaborative problem-solving activities. In this project, we used log data produced by students as they worked in groups on a tablet computer to develop automated detectors related to their collaboration. These detectors were used to drive automated in-the-moment prompts that guided instructors in supporting their students’ collaborative problem solving.